A lot of people talk about using AI in machine vision, but few actually practice it.

Perhaps because a successful outcome depends on much more than the machine learning model you use. While the ML model is, of course, a cornerstone, it's only a small piece of the puzzle you need to successfully implement an AI-powered machine vision solution on your production line.

What lies beyond that, and what many tend to overlook, is what we'll cover in this blog post.

One of the challenges of implementing ML is that it requires its own process, its own pipeline. You can't rely on the principles of DevOps, which has a proven process for deploying software code.

This is because machine learning involves both code and data. While the code is developed in a controlled environment, the data - the images from your production line - is constantly changing. So, code and data really exist on two different levels, and the key is to create a controlled connection between them when training and deploying the ML model.

We ensure this with a comprehensive infrastructure around the ML model. The process of implementing an AI-based vision system can be divided into three phases:

- System design

- Implementation and deployment

- Maintenance and continuous improvement

Designing the AI-based vision system

The first phase is about making all the fundamental choices that are crucial to ultimately creating the solution that best solves the task.

This is where we assess whether a quality control task can be solved with traditional computer vision or whether we can benefit from using AI. If we decide that we can benefit from AI, it's a matter of choosing which approach we want to use - is it segmentation, classification, anomaly detection, or a combination?

Watch: [The Vision Lab #19] How to choose the right type of machine learning for your vision project

At the same time, we assess whether we can use standard solutions with smart cameras from Datalogic or Cognex or whether we should build the solution on our own JLI Platform.

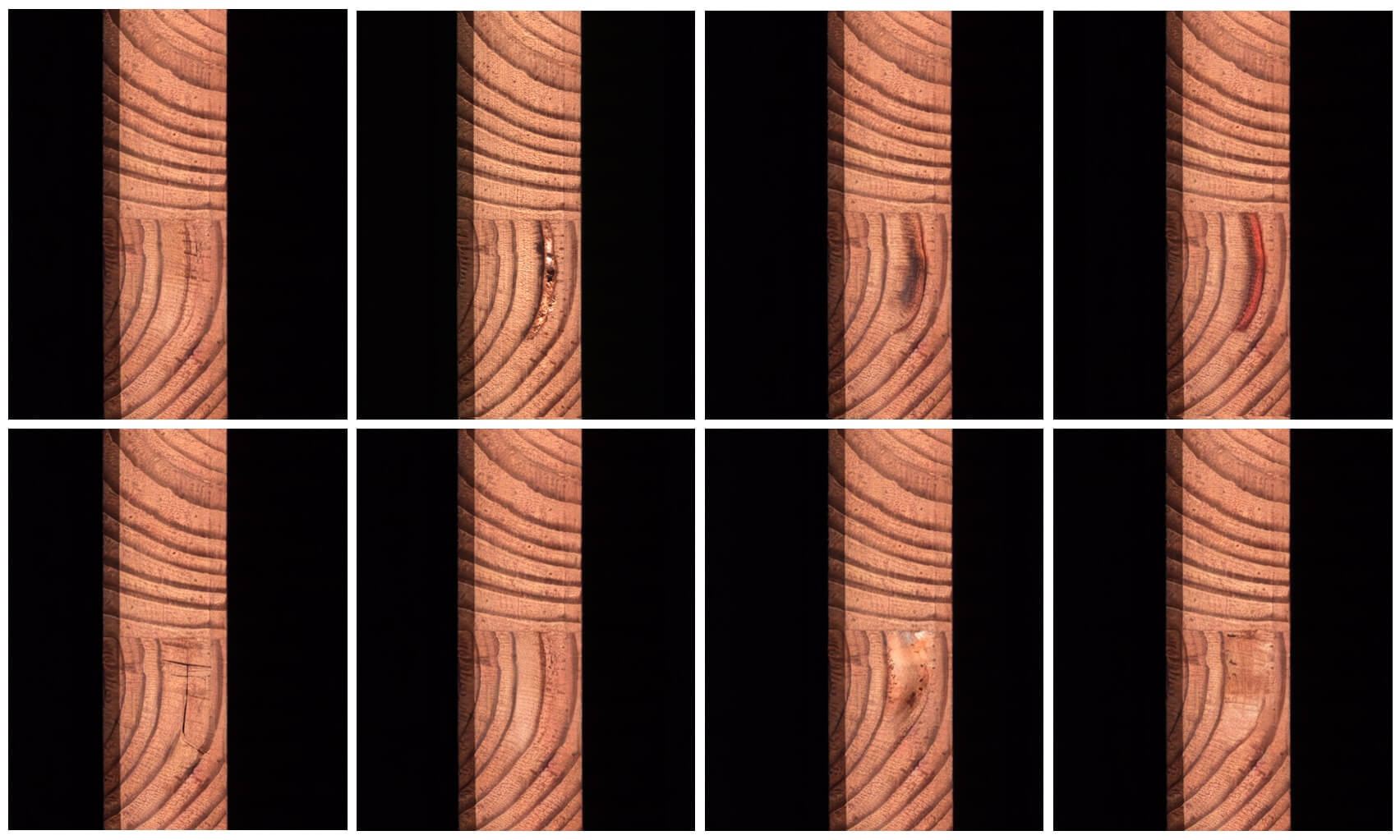

At the same time, we explore the possibilities and limitations of the production environment and define the camera and lighting setup we need to capture images of the defects we are looking for.

This part is essential for the quality of the images and, thus, the data on which the ML model will be trained, and consequently, for the project's success.

Once the complete system has been designed and tested, we take it to the production line for implementation.

Implementing and deploying the ML model

This phase typically starts by focusing on precise calibration and documentation to ensure that the solution can later be scaled to other production sites.

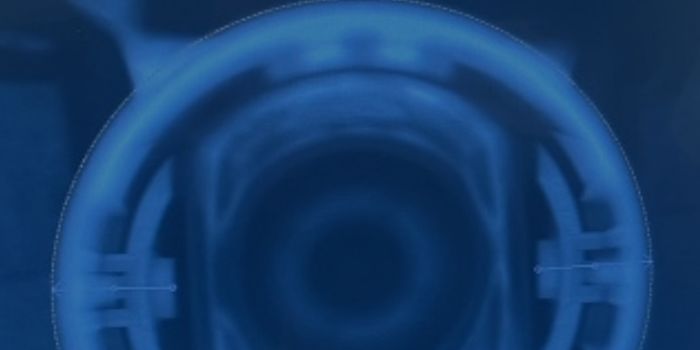

The system captures images on the production line, which are then preprocessed to normalize them and reduce their complexity for faster analysis.

The images are first annotated by a trained super user who knows the ins and outs of the inspected product and has extensive experience with quality control. Ultimately, what we want the machine learning model to do is mimic the evaluation of a skilled operator. After the initial annotation, our vision engineers evaluate the data, make additional annotations, and start building the model.

We use the AI developer platform Weights & Biases to assist in building the model by evaluating training, keeping track of version history, etc.

The model goes through a training loop, and the retrained model is evaluated on the current production. We evaluate the results, annotate more images, retrain the model, and so on until we achieve the desired performance.

Maintenance and continuous improvement of the ML model

Phase 3 is where the vision system is - in principle - done. However, as mentioned at the beginning of this blog post, one of the challenges of creating AI-based vision systems is the fact that the data input is dynamic. The production environment might change over time, changes in materials or production process might lead to different defects than the ones the ML model was initially trained on, etc.

This is why it is important to have a setup for continuously evaluating the results and retraining the model if necessary.

To do this, we implement our JLI Annotator, which allows the operator to annotate the images from the system inline. If a rare defect appears, this could be included in the training material. Also, a dedicated software algorithm is running to monitor all input to the ML model and detect potential input drift. Samples of outside tolerances can be automatically saved for operator annotation in the JLI Annotator.

The annotated images are then passed to offline annotation, where a super user validates or refines the operator's annotations. The annotated images can then be uploaded to our ML server and included in the training set so that the model can be retrained on the new images and adapted to changes or variations in production.

A thought-through process from start to never finishing

As the process shows, training the machine learning model is just a small part of what goes into solving a quality control challenge with AI.

It is just as important to lay out the right foundation from the beginning and ensure that the model has quality images to train on as it is to have efficient processes in place to train and retrain the model.

And for the system to adapt to changes in the production environment or the inspected product, it’s crucial to have a setup for monitoring performance and retraining the model when new defects occur or quality control standards change.

.jpg?width=720&height=405&name=JLI%20AI%20ecosystem%20(1).jpg)