The rapid development of AI in general and Large Language Models (LLMs) in particular is setting the agenda for machine vision right now and will continue to do so in 2025.

This development brings new challenges, because these models require massive amounts of input data, and new opportunities, because they make it possible to process huge volumes of data.

The importance of data is, of course, nothing new, but new technologies mean that the ability to collect, exchange, generate, and utilize large amounts of data will be crucial for creating cutting-edge machine vision solutions.

Vision data as part of a bigger picture

A good example of this is the trend of combining multiple data sources in production to piece together new insights, like pieces of a puzzle.

For example, we work with a customer who takes data from the vision system and combines it with several other data sources in the production line to make predictions about product quality and get clear answers on how to optimize production.

In this way, machine vision is no longer ‘just’ a question of determining whether a given item meets the set quality standards. It becomes part of an advanced, continuous optimization of the production process and an opportunity to predict errors and correct production in time.

In principle, this could have been done before, but because it requires processing huge amounts of data, it has not been a viable or scalable option to do it by hand. The development of LLMs has changed that: an LLM makes it possible to process large amounts of data and apply the results to a practical production situation.

Seamless data exchange is key in this context. That is why our solutions will increasingly focus on making our data easily accessible by delivering it in the necessary formats, for example by following the OPC UA standard.

More data paves the way for a more agile production

Traditionally, one of the challenges of using machine learning in vision systems is that it takes time to adapt to changes in production because the network has to be trained on new data.

However, the ability to collect and manage large amounts of data changes this too. With a large data pool that we can quickly train on, we can recalibrate the network to reflect changes in production, which allows for a much more agile production process.

Generating synthetic images for machine vision

One of the traditional hurdles on the road to widespread use of AI in machine vision is getting the necessary training data quickly and cost-effectively. That's why there will be a strong focus on solving this problem in 2025.

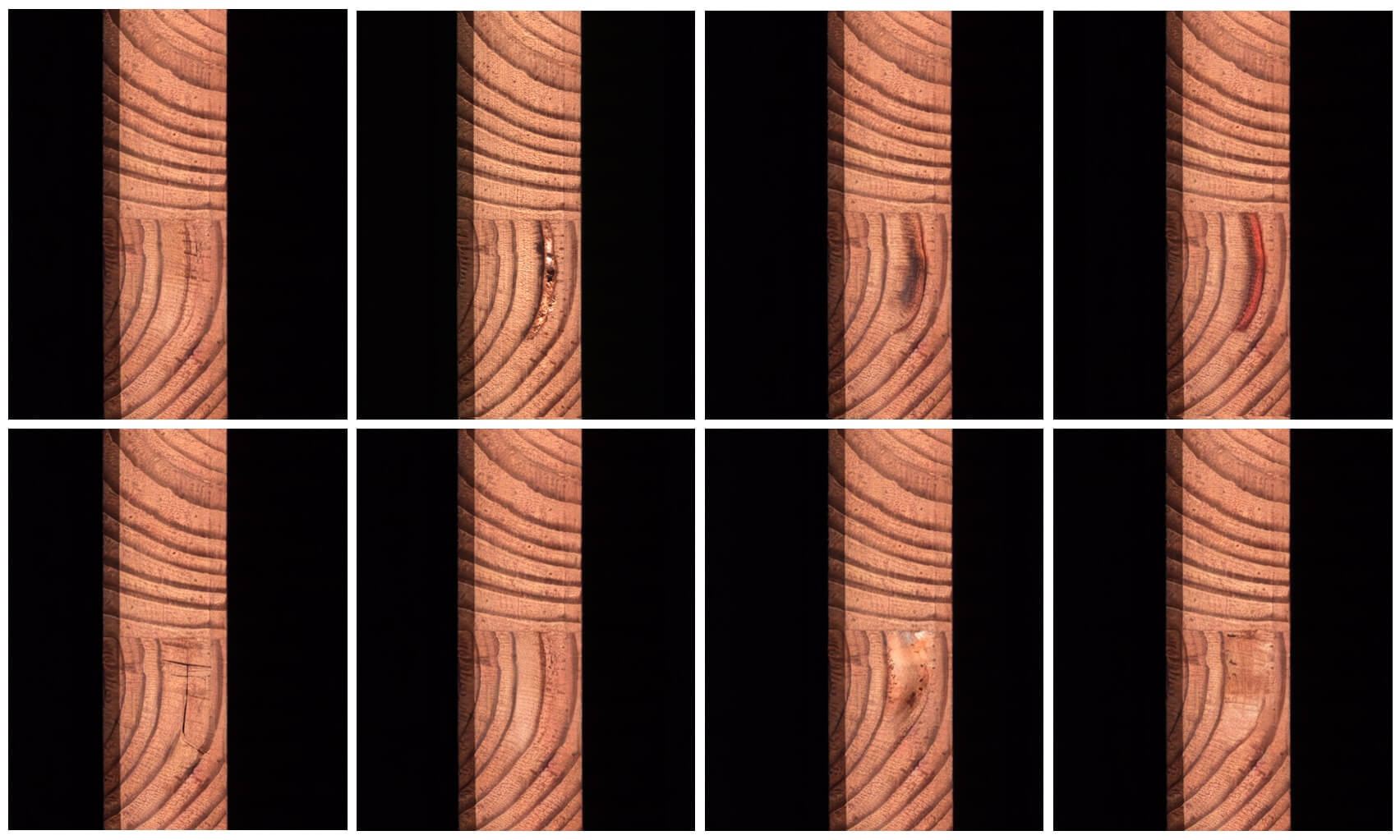

We are pursuing two strategies in this area. The first is to use deep learning to generate new images from existing images. You can see it as a further development of data augmentation, where we can now generate completely new data based on existing data.
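For contrast, the sketch below shows classic data augmentation, the baseline this strategy builds on: each variant is just a flipped, rotated, or brightness-shifted copy of an existing labeled image. It does not generate genuinely new content the way a deep generative model would. Images are represented as plain lists of 0-255 grayscale values to keep the example dependency-free, and the transform choices and ranges are illustrative assumptions.

```python
import random

Image = list[list[int]]  # grayscale image, rows of 0-255 pixel values

def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def rotate90(img: Image) -> Image:
    """Rotate clockwise: the first column, read bottom-up, becomes the first row."""
    return [list(col) for col in zip(*img[::-1])]

def adjust_brightness(img: Image, delta: int) -> Image:
    """Shift every pixel by delta, clamped to the valid 0-255 range."""
    return [[min(255, max(0, p + delta)) for p in row] for row in img]

def augment(img: Image, n: int, seed: int = 0) -> list[Image]:
    """Produce n randomly transformed variants of one labeled image."""
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        v = img
        if rng.random() < 0.5:
            v = hflip(v)
        for _ in range(rng.randrange(4)):  # 0-3 quarter turns
            v = rotate90(v)
        v = adjust_brightness(v, rng.randint(-30, 30))
        out.append(v)
    return out
```

Because every variant inherits the original's label, augmentation multiplies the training set cheaply, but it can never show the network a defect shape it has not already seen. That gap is what generative approaches aim to close.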

The second strategy is to use 3D rendering software to create digital twins of our entire vision setup with camera and lights and the item to be inspected. By capturing material properties and defects from actual items, we can transfer them to our digital twin and use them to generate a large number of training images.
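One common way to turn a digital twin into many training images is to randomize the scene before each render, so the network sees realistic variation in pose, lighting, and defects. The sketch below only samples those scene configurations; the parameter names, ranges, and defect types are hypothetical, and the actual rendering step in the 3D software is not shown.

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class SceneParams:
    """One randomized configuration of the (hypothetical) digital-twin scene."""
    camera_distance_mm: float
    camera_tilt_deg: float
    light_intensity: float        # relative to the nominal setup (1.0 = nominal)
    defect_type: Optional[str]    # None means a defect-free item
    defect_x: float               # defect position on the item, normalized 0..1
    defect_y: float

# Assumed defect classes; in practice these would be captured from real items.
DEFECT_TYPES = ["scratch", "dent", "stain"]

def sample_scenes(n: int, defect_rate: float = 0.3, seed: int = 0) -> list[SceneParams]:
    """Draw n scene configurations; each one would be rendered into a labeled image."""
    rng = random.Random(seed)
    scenes = []
    for _ in range(n):
        has_defect = rng.random() < defect_rate
        scenes.append(SceneParams(
            camera_distance_mm=rng.uniform(280.0, 320.0),  # jitter around the real mount
            camera_tilt_deg=rng.gauss(0.0, 2.0),
            light_intensity=rng.uniform(0.8, 1.2),
            defect_type=rng.choice(DEFECT_TYPES) if has_defect else None,
            defect_x=rng.random(),
            defect_y=rng.random(),
        ))
    return scenes
```

Since the scene parameters are known, every rendered image comes with a perfect label for free, including the exact defect type and position.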

Although it is a relatively new technology, it is already in use on customer projects, and we expect it to develop rapidly in 2025.

New vision technologies emerging

In 2025, we will also see new vision technologies gaining ground. We have a special focus on event-based vision and hyperspectral imaging, among others.

Hyperspectral imaging is often connected to agriculture and the food industry, but we also see a range of possible applications within, for example, pharma.

Unlike traditional color imaging, which records light in just three color bands (red, green, and blue), hyperspectral imaging divides light into many more spectral bands (up to 900 bands), ranging from the visible to the short-wave infrared. This enables the detection of subtle differences in the chemical composition, material properties, and biological characteristics of objects.
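To make "subtle differences in chemical composition" concrete, the sketch below uses the Spectral Angle Mapper, one well-known way to compare a pixel's spectrum against reference spectra. It is chosen here purely as an illustration, not as the method we use. Only four bands are shown instead of hundreds, and the reference materials and the `max_angle` threshold are hypothetical.

```python
import math

def spectral_angle(pixel: list[float], reference: list[float]) -> float:
    """Angle (radians) between two spectra; smaller means more similar material.

    Insensitive to overall brightness, since scaling a spectrum does not change its direction.
    """
    if len(pixel) != len(reference):
        raise ValueError("spectra must have the same number of bands")
    dot = sum(p * r for p, r in zip(pixel, reference))
    norm = math.sqrt(sum(p * p for p in pixel)) * math.sqrt(sum(r * r for r in reference))
    # Clamp to [-1, 1] to guard against rounding just outside acos's domain.
    return math.acos(max(-1.0, min(1.0, dot / norm)))

def classify(pixel: list[float], references: dict[str, list[float]], max_angle: float = 0.1):
    """Return the closest reference material, or None if nothing is within max_angle."""
    name, angle = min(
        ((n, spectral_angle(pixel, r)) for n, r in references.items()),
        key=lambda item: item[1],
    )
    return name if angle <= max_angle else None
```

Two materials that look identical in red, green, and blue can still produce clearly different angles once the comparison spans many narrow bands.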

Event-based vision works by processing only the dynamic changes in a scene: data is recorded and transferred only when a pixel changes. This improves processing speed and makes event-based vision well suited to applications that handle high-speed production and track rapid changes. It also opens up new possibilities for using machine vision in poor lighting conditions.
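The sketch below approximates this principle in software by converting a sequence of ordinary frames into events: a pixel emits an event only when its log intensity has changed by more than a threshold since its last event, so static pixels produce no data. This is a simplified, frame-based simulation under assumed parameters (`threshold`, one event per pixel per frame); a real event sensor works asynchronously in each pixel with microsecond timing.

```python
import math
from typing import NamedTuple

class Event(NamedTuple):
    t: int         # frame index, standing in for the sensor's fine-grained timestamp
    x: int
    y: int
    polarity: int  # +1 brighter, -1 darker

def frames_to_events(frames: list[list[list[float]]], threshold: float = 0.2) -> list[Event]:
    """Emit an event whenever a pixel's log intensity moves more than `threshold`
    away from its level at the last event. Unchanged pixels produce nothing."""
    eps = 1e-3  # avoid log(0) for black pixels
    ref = [[math.log(p + eps) for p in row] for row in frames[0]]
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for y, row in enumerate(frame):
            for x, p in enumerate(row):
                level = math.log(p + eps)
                diff = level - ref[y][x]
                if abs(diff) >= threshold:
                    events.append(Event(t, x, y, 1 if diff > 0 else -1))
                    ref[y][x] = level  # reset this pixel's reference level
    return events
```

Working on log intensity means the threshold reacts to relative rather than absolute changes, which is one reason the approach holds up in dim scenes.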

Move fast to stay ahead

Big improvements in both software and hardware are paving the way for new possibilities within machine vision in 2025, and at JLI, we will be dedicating a big chunk of our time to researching and testing new technologies to stay at the forefront of our fast-moving industry.