Machine Vision for 3D Inspection

3D/Robotics

JLI vision's 3D/Robotics Systems are solutions for measuring in three dimensions, estimating the position of objects, bin picking, and sampling.

The systems cover a wide range of production processes. All equipment is designed for the environment in which it will operate.

What is 3D Inspection?

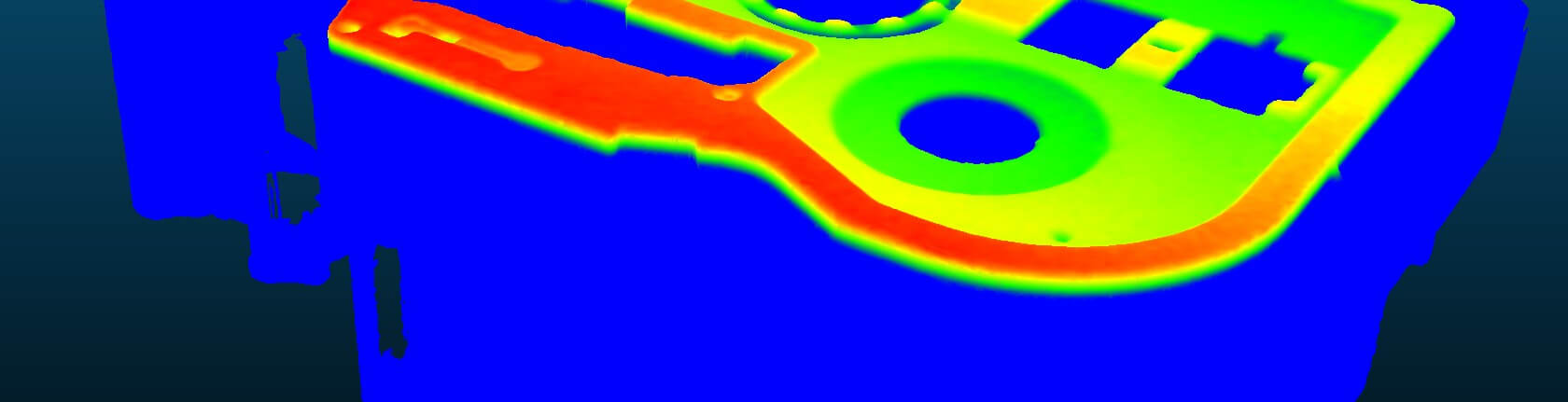

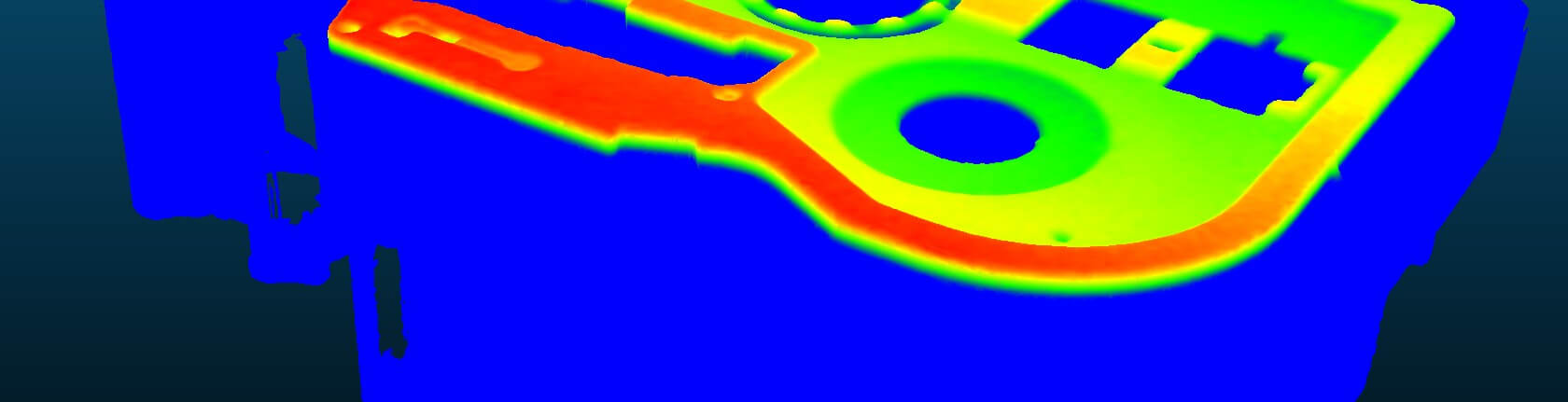

In applications where a profile or contour of the object does not provide the right information, 3D technology can be helpful.

With 3D you capture the shape of an object and gain an extra dimension - depth information. With 3D, you can measure geometric features on the surface of an object regardless of material or color.

In some setups, one advantage of 3D is that it is contrast invariant, which makes it ideal for inspecting low-contrast objects. It is also more robust against minor lighting variation and ambient light. Measurements are highly repeatable because the components often come in one package with camera, optics, lighting, and pre-calibration.

3D inspection offers the possibility of:

- Detecting surface height defects

- Measuring volume (X, Y, and Z axes) to obtain shape- and position-related parameters

- Helping robots pick up objects randomly located in a bin, which automates factory processes

- Guiding robots so they can move through their surroundings autonomously
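To make the first two points concrete, here is a minimal sketch in plain Python. It is an illustration only, not JLI's method: it assumes the 3D sensor delivers a height map (a grid of Z values in millimetres), and the function names, the sample data, the tolerance, and the pixel area are all hypothetical.

```python
from statistics import median


def find_height_defects(height_map, tolerance_mm):
    """Return (row, col, deviation) for every cell whose height differs
    from the median height by more than tolerance_mm.
    Assumption: the part is nominally flat, so the median is a fair reference."""
    reference = median(h for row in height_map for h in row)
    defects = []
    for r, row in enumerate(height_map):
        for c, h in enumerate(row):
            deviation = h - reference
            if abs(deviation) > tolerance_mm:
                defects.append((r, c, deviation))
    return defects


def volume_above_base(height_map, pixel_area_mm2, base_mm=0.0):
    """Approximate the volume (mm^3) as the sum of per-pixel columns
    that rise above the base plane."""
    total_height = sum(max(h - base_mm, 0.0) for row in height_map for h in row)
    return total_height * pixel_area_mm2


# Hypothetical 4x4 height map in mm: flat at 2.0 with one dent and one bump.
height_map = [
    [2.0, 2.0, 2.0, 2.0],
    [2.0, 1.5, 2.0, 2.0],
    [2.0, 2.0, 2.5, 2.0],
    [2.0, 2.0, 2.0, 2.0],
]

defects = find_height_defects(height_map, tolerance_mm=0.2)
volume = volume_above_base(height_map, pixel_area_mm2=0.25)
print(defects)  # [(1, 1, -0.5), (2, 2, 0.5)]
print(volume)   # 8.0
```

Because the check works on height values rather than pixel intensity, the result does not depend on the color or contrast of the object. A real system would also need calibration, noise filtering, and a reference surface suited to the part.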

Why choose the JLI 3D/Robotics Inspection System?

- Custom made

- Adapted to existing production lines or to your robot manufacturer

- Online access for remote support

Book a meeting to learn more


Send me an email at hb@jlivision.com or book a meeting, and let's find out how we can help you.
